Author:

Martin McGarry

President and Chief Data Scientist

Big data has become an integral part of modern business operations. By some estimates, 90% of the world’s data was produced in the last two years, so companies need efficient ways to manage and analyze it.

A modern data stack helps businesses gather, store, transform, and visualize information seamlessly. Unlike traditional systems that require on-premise hardware and complex maintenance, modern data solutions are faster, more scalable, and more cost-effective.

Let’s explore what modern data stacks are, their core components, benefits, and how you can implement them for your organization.

What is a Modern Data Stack?

A modern data stack is a set of cloud-based tools that help businesses collect, store, and analyze large amounts of data efficiently. Unlike traditional data stacks that rely on on-premise hardware, the modern data stack uses cloud technologies, which makes it easier to manage than legacy data stacks.

It also helps businesses access data in real time, allowing for faster decisions.

Modern vs Traditional Data Stack

The modern data stack stands out from traditional data management approaches with its flexibility, efficiency, and growth potential. Businesses today need faster, cloud-based solutions to keep up with the growing volume of data.

Cloud-Based vs. On-Premise Infrastructure

One of the biggest differences is that the modern data stack is mainly cloud-based, while traditional data stacks rely on physical, on-premise servers. Cloud-based solutions like Databricks and Snowflake offer greater scalability and flexibility, allowing organizations to easily adjust data storage and processing power as needs grow.

Cloud-based computing means no more costly hardware upgrades or server maintenance. Cloud adoption has also reportedly increased revenue for finance companies by up to 15%. With these tools, your business can scale up or down depending on your current data demands, without downtime or new hardware purchases.

Modularity and Integration

A modern data stack is designed to be modular, which means you have the power to choose and integrate the best tools for your business needs. Whether it’s data ingestion, transformation, or analytics, you can mix and match cloud-based tools to build a custom stack.

For example, you might use Fivetran for ingestion, dbt for transformations, and Tableau for visualization. A modular approach allows your teams to focus on specific tasks without being locked into a rigid, one-size-fits-all system.

Traditional data stacks are more monolithic, meaning they often require all-in-one solutions that are harder to modify or upgrade. This lack of flexibility can make it difficult to adopt new technologies or scale quickly.

Real-Time Processing vs. Batch Processing

In traditional data stacks, processing was often done in batches, where businesses had to wait for periodic updates. The modern stack, on the other hand, supports real-time data processing, which enables companies to analyze data instantly.

One great example is Uber’s use of Apache Flink for real-time data streaming to match drivers with riders. Doing so helps the platform respond to rider demand in real time, improving customer experience and operational efficiency.
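
To make the difference between batch and real-time processing concrete, here is a minimal Python sketch. It is not how Apache Flink or Uber's platform works internally. The event data and function names are hypothetical, chosen only to show that a batch job produces one answer after all the data has arrived, while a streaming job produces an updated answer after every event.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical ride-request events: (zone, number_of_requests)
Event = Tuple[str, int]


def batch_totals(events: Iterable[Event]) -> Dict[str, int]:
    """Batch style: wait for the full set of events, then compute totals once."""
    totals: Dict[str, int] = defaultdict(int)
    for zone, requests in events:
        totals[zone] += requests
    return dict(totals)


def streaming_totals(events: Iterable[Event]) -> Iterator[Dict[str, int]]:
    """Streaming style: emit an updated snapshot after every incoming event."""
    totals: Dict[str, int] = defaultdict(int)
    for zone, requests in events:
        totals[zone] += requests
        yield dict(totals)


events = [("downtown", 3), ("airport", 5), ("downtown", 2)]
final_batch = batch_totals(events)          # one result, available at the end
snapshots = list(streaming_totals(events))  # one result per event
```

Both approaches end with the same totals. The streaming version simply makes intermediate results available while data is still arriving, which is what enables faster reactions.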

Scalability and Cost-Effectiveness

Another main advantage of the modern data stack is its ability to scale. With cloud-based tools, you pay only for the storage and processing you use, which makes the stack a cost-effective solution for both small and large companies.

In contrast, traditional data stacks often require heavy upfront investments in hardware and infrastructure. Plus, there are additional costs for maintenance and upgrades.

Enhanced Data Governance and Security

The modern data stack includes advanced features like metadata management, role-based access control (RBAC), and encryption. These protect sensitive data and make sure your business complies with regulations like GDPR. Companies in regulated industries, such as healthcare and finance, greatly benefit from modern data stacks that provide encryption and data governance.

Ease of Use for Business Users

The modern data stack is more user-friendly, with many tools offering low-code or no-code interfaces. Now, non-technical users, like managers and CEOs, can access and work with data.

Traditional data stacks, on the other hand, often require a dedicated IT team to manage and maintain the system, creating bottlenecks in accessing data.

Core Components of a Modern Data Stack

Modern data stacks include data sources, storage, transformation, analytics, and visualization. These core components work together to create a comprehensive data ecosystem.

Data Sources

The modern stack starts with gathering data from various sources, including databases, SaaS platforms, IoT devices, social media, and customer interaction logs.

For example, a retail company might pull data from its CRM, sales platform, and website analytics to analyze customer behavior. It’s important for businesses to collect data from multiple sources since this provides a more comprehensive view of their operations.

Once you have your data sources, it’s time to transfer raw data from the source into a data warehouse or data lake. In the past, this was a manual, time-consuming task. But now, modern data ingestion tools automate retrieval by using an ELT (Extract, Load, Transform) approach.

Fivetran is a popular tool that automatically pulls data from hundreds of sources, such as Salesforce or Google Analytics, and loads it into a cloud warehouse like Databricks or Snowflake.

Another tool, Stitch, also simplifies the transferring process, allowing businesses to connect data sources quickly and monitor for errors or delays.
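
The ELT pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for a cloud warehouse, and the sample rows, table names, and function names are hypothetical. It does not reflect how Fivetran or Stitch are implemented.

```python
import sqlite3

# Hypothetical raw records, standing in for rows pulled from a CRM export.
RAW_ORDERS = [
    ("1001", "north", "250.00"),
    ("1002", "south", "99.50"),
    ("1003", "north", "10.50"),
]


def extract():
    """Extract: return raw rows exactly as the source provides them."""
    return list(RAW_ORDERS)


def load(conn, rows):
    """Load: store the untouched rows in a raw table inside the warehouse."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def transform(conn):
    """Transform: build a typed, analysis-ready table with SQL, after loading."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               region,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        """
    )


# In-memory SQLite is an assumption here; it stands in for a cloud warehouse.
conn = sqlite3.connect(":memory:")
load(conn, extract())
transform(conn)
north_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE region = 'north'"
).fetchone()[0]
```

The key point is the order of operations: the raw data lands in the warehouse first, and the transformation runs there afterwards, so the original rows remain available if the transformation logic changes.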

Data Storage

Data storage is the backbone of the entire modern data stack operation. Proper storage ensures that data is accessible when needed, which improves productivity and supports real-time analysis. Conversely, without a solid strategy, organizations can face slow data retrieval, higher costs, and difficulties in managing large datasets.

Platforms like Snowflake, Amazon Redshift, and Google BigQuery allow companies to store massive amounts of data while only paying for the storage and computing power they use. This level of flexibility offers both scalability and cost savings.

Data Lakes vs Data Warehouses vs Data Lakehouses

In the modern data stack, there are different storage options: data lakes, data warehouses, and the rising data lakehouses.

Data lakes store large volumes of raw, unstructured, and semi-structured data. They’re highly flexible but can become disorganized if not properly managed. Data lakes are ideal for storing a wide variety of data types, like social media posts, images, and sensor data.

Meanwhile, data warehouses are best used for structured data and fast querying. They’re the go-to for generating business insights from cleaned and structured datasets.

Lastly, data lakehouses are a newer option, combining the strengths of both data lakes and data warehouses. They can store raw data and also support fast SQL queries on structured data. This solution is gaining popularity because it allows businesses to handle all data types in one platform.

Data Transformation

Data transformation involves converting unstructured or inconsistent data into a clean and usable format that users can analyze easily. The goal is to keep data accurate, complete, and properly formatted.

By transforming data, businesses can perform efficient analytics and automate processes. For example, duplicates and errors can lead to inaccurate forecasts, which might cost companies a significant amount of time and money.

Data transformation involves data cleaning, normalization, and aggregation. Data cleaning involves spotting and correcting errors or inconsistencies. Meanwhile, normalization organizes data to keep it consistent and free from redundancy.

Finally, aggregating data means summarizing or grouping data to simplify analysis. This is especially useful when dealing with large datasets. For example, salespeople might aggregate data to analyze total monthly sales by region, making it easier for executives to view high-level trends.
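
As a rough illustration of the three steps, the Python sketch below cleans, normalizes, and aggregates a handful of sales rows. The data and function names are hypothetical, and normalization is simplified here to standardizing region labels rather than full database normalization.

```python
from collections import defaultdict

# Hypothetical raw sales rows: (order_id, region, month, amount)
raw_sales = [
    (1, " North ", "2024-01", 100.0),
    (1, " North ", "2024-01", 100.0),  # duplicate order
    (2, "north", "2024-01", 50.0),
    (3, "SOUTH", "2024-01", 75.0),
    (4, "south", "2024-02", None),     # missing amount
    (5, "South", "2024-02", 25.0),
]


def clean(rows):
    """Cleaning: drop rows with missing amounts and repeated order IDs."""
    seen, result = set(), []
    for order_id, region, month, amount in rows:
        if amount is None or order_id in seen:
            continue
        seen.add(order_id)
        result.append((order_id, region, month, amount))
    return result


def normalize(rows):
    """Normalization (simplified): make region labels consistent."""
    return [(oid, region.strip().lower(), month, amount)
            for oid, region, month, amount in rows]


def aggregate(rows):
    """Aggregation: total sales per (region, month)."""
    totals = defaultdict(float)
    for _, region, month, amount in rows:
        totals[(region, month)] += amount
    return dict(totals)


monthly_sales = aggregate(normalize(clean(raw_sales)))
```

Without the cleaning step, the duplicate order would inflate January sales for the north region, which is exactly the kind of error that leads to inaccurate forecasts.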

Tools like dbt (Data Build Tool) and Dataform are popular because they help streamline the transformation stage. dbt allows data analysts to transform raw data in the cloud warehouse with simple SQL commands. It reduces the reliance on engineers, giving analysts more control over the data transformation process.

Similar to dbt, Dataform focuses on making data transformations easier to manage. It helps transform, clean, and prepare data for analysis while ensuring consistency across the board. It can integrate seamlessly with cloud platforms like BigQuery and Snowflake.

Data Analytics and Visualization

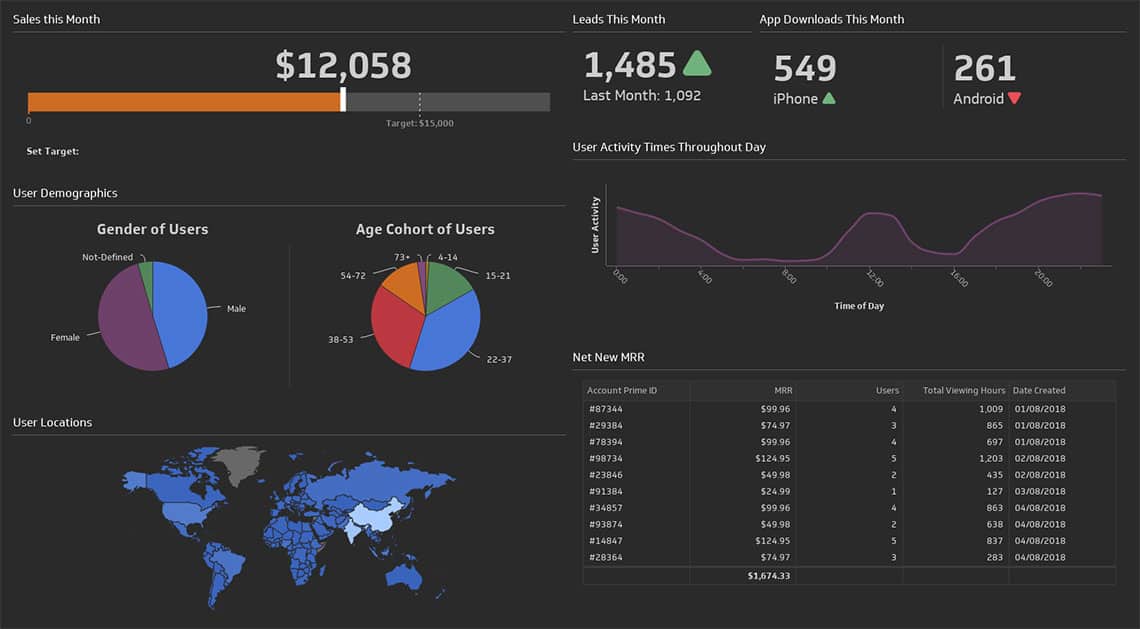

Data analytics and visualization are the final pieces of the modern data stack. In data analysis, you spot trends and patterns, while visualization presents these insights clearly. These help department heads and stakeholders quickly understand complex information.

Business intelligence (BI) tools such as Tableau, Power BI, and Klipfolio provide easy-to-understand visualizations that allow users, including non-technical ones, to drill down into the data and extract meaningful insights.

BI tools can connect directly to cloud data warehouses, which lets businesses analyze large datasets efficiently. This integration helps your BI tools keep handling the load as your data grows, without major performance issues.

Benefits of a Modern Data Stack

Modern data stacks make your data analytics more scalable thanks to their cloud-based and modular nature. They also bring costs down because you don’t have to set up infrastructure and you pay only for the resources you use.

Scalability and Flexibility

As businesses grow, the volume of data increases, and your stack must be able to handle that growth without compromising performance. The modern data stack can scale effortlessly because of its cloud-based architecture and modular design.

Additionally, cloud storage enhances collaboration across teams, as all data is stored in a central location accessible from anywhere. This flexibility makes cloud-based solutions a great option for companies looking to scale their data infrastructure without being bogged down by hardware limitations.

Cost Efficiency

Again, one of the key benefits of a modern data stack is reduced upfront infrastructure costs. Traditionally, businesses had to invest heavily in physical servers, storage, and maintenance. These legacy systems required significant capital to purchase and maintain hardware, and they lacked the flexibility to scale with ease.

With modern cloud-based data systems, businesses can store their data on platforms like Snowflake, Databricks, or Amazon Redshift, where the provider handles storage and management.

This arrangement eliminates the need for costly on-premise solutions, allowing organizations to direct resources elsewhere.

Cloud-based modern data stacks also typically operate on a pay-as-you-go model, which allows businesses to pay only for the data storage and processing power they actually use. This model is highly cost-efficient since it lets companies avoid buying too much equipment or paying for unused capacity.

For example, during a marketing campaign, a business might need to filter a high volume of data. With the pay-as-you-go model, they can scale up their cloud resources to handle the increased load. Once the campaign is over, they can then scale back down, paying only for what they need without long-term commitments.
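
The campaign scenario can be worked through with some simple arithmetic. Every number in the Python sketch below is hypothetical, including the hourly rate and the capacity units, and it assumes for simplicity that fixed capacity costs the same per unit-hour as cloud capacity. The goal is only to show why paying for peak capacity during the peak alone tends to cost less than provisioning for the peak all month.

```python
# All figures are hypothetical and chosen only to make the arithmetic easy to follow.
HOURS_PER_MONTH = 720        # 30 days x 24 hours
RATE_PER_UNIT_HOUR = 2.0     # assumed price of one compute unit for one hour

BASELINE_UNITS = 2           # capacity needed in a normal period
CAMPAIGN_UNITS = 8           # capacity needed while the campaign runs
CAMPAIGN_HOURS = 120         # the campaign lasts 5 days


def pay_as_you_go_cost(baseline_units, peak_units, peak_hours):
    """Pay for peak capacity only during peak hours, baseline otherwise."""
    normal_hours = HOURS_PER_MONTH - peak_hours
    unit_hours = baseline_units * normal_hours + peak_units * peak_hours
    return RATE_PER_UNIT_HOUR * unit_hours


def fixed_capacity_cost(peak_units):
    """Provision for the peak all month, as fixed infrastructure would require."""
    return RATE_PER_UNIT_HOUR * peak_units * HOURS_PER_MONTH


elastic = pay_as_you_go_cost(BASELINE_UNITS, CAMPAIGN_UNITS, CAMPAIGN_HOURS)
fixed = fixed_capacity_cost(CAMPAIGN_UNITS)
savings = fixed - elastic
```

Under these assumptions, the elastic setup pays for 2,160 unit-hours instead of 5,760. Real pricing varies by provider and workload, so treat the figures as a way to reason about the model rather than as an estimate.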

For CEOs, COOs, and managers, this translates into more predictable budgets and better resource allocation, which supports long-term growth and sustainability.

Improved Data Accessibility

Modern data stacks also enhance data accessibility for non-technical team members. They allow more people in your organization to work with data without needing advanced technical skills.

Before, data analysis required specialized knowledge of databases, SQL, or programming languages. Now, thanks to user-friendly tools in the modern data stack, anyone from marketing to sales can access, analyze, and use data to make better decisions.

Accessibility fosters a data-driven culture, where decisions across all levels of an organization are based on data insights rather than intuition. Now, marketers can quickly pull campaign performance metrics, while finance can easily access financial projections.

To strengthen this culture, business leaders should prioritize democratizing data within their companies by implementing modern data tools that enable users to self-serve their data needs.

Self-service BI tools let non-technical users generate reports, build dashboards, and gain insights without writing complex queries. Tableau and Klipfolio, for example, allow users to create drag-and-drop visualizations to explore trends and spot data patterns.

Implementing a Modern Data Stack in 5 Steps

Choosing the right data storage and making sure it integrates with your data sources are crucial in implementing a modern stack. You also have to consider the data transformation, visualization, and data governance processes.

Step 1: Choose Your Data Warehouse or Lakehouse

Finding the right cloud-based storage solution is the foundation of building a modern data stack. Your choice should depend on the specific needs of your organization, including the type of data you collect, how you plan to use it, and your growth requirements.

If you mainly work with structured data and need rapid reporting and analysis, a cloud-based data warehouse is likely the best choice.

On the other hand, if your company deals with use cases involving both structured and unstructured data, such as analyzing IoT data, logs, or social media content, a lakehouse is a better fit.

Step 2: Integrate Your Data Sources

Choose a platform that can integrate with your existing tools. This way, you won’t run into performance issues as your data volumes grow.

To get the most out of your data, you need a reliable and efficient data ingestion process. The right tools will allow you to extract data from multiple sources, load it into your data warehouse or lakehouse, and transform it there, in line with the ELT approach.

Two popular options include Fivetran and Stitch, which provide easy-to-use, cloud-based platforms for the ingestion of data. These tools come with pre-built connectors that allow you to pull data from popular sources like Salesforce, Google Analytics, and AWS.

Step 3: Set Up Transformation Tools

Once you have your data, the next step is to clean and transform it to make it ready for analysis. This step makes sure your modern data stack processes data effectively. Raw data often contains errors, duplicates, and inconsistencies that must be resolved before the data can provide meaningful insights.

Platforms like dbt (Data Build Tool) and Matillion allow you to automate transformation tasks such as data cleaning, normalization, and aggregation.

Set up automated workflows within your tool of choice to trigger transformations as soon as new data arrives. Doing so keeps your modern data stack up to date and provides your business with real-time insights.

Step 4: Configure BI and Visualization Tools

After transforming data, you then display it using BI and visualization tools for analysis. Connect your data warehouse to the BI tool. Most tools allow you to complete the integration by just providing the required authentication credentials.

Once connected, import your transformed data into the BI tool’s workspace. Most BI tools let you filter and segment data as needed before creating visualizations.

After importing data, you can start building visualizations. For example, a sales manager could create a dashboard displaying daily sales performance across regions.

Additionally, self-service BI tools are reportedly vital for 60% of research and development (R&D) departments.

For instance, an R&D team at a consumer electronics company might use Klipfolio to analyze sensor data from their latest product prototype to find potential design flaws or track battery life, processing speed, and temperature fluctuations.

Meanwhile, a pharmaceutical company’s R&D team could use BI tools to analyze the results of clinical trials and monitor the progress of multiple research projects simultaneously.

Step 5: Incorporate Data Governance and Security

Ensuring data quality, compliance, and security at every stage protects sensitive information. Implementing robust data governance practices will help your organization maintain control and integrity over its data stack.

To maintain high-quality data, start by establishing rules for how it should be collected, cleaned, and used. Use tools to track transformations and maintain consistency across all your data sources. Regular audits of your stack will also help identify any inaccuracies or inconsistencies.
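
One lightweight way to express such rules is as small, testable checks. The Python sketch below is only an example: the field names, the rules themselves, and the `validate` and `audit` helpers are all hypothetical, and dedicated data quality tools offer far more than this.

```python
# Hypothetical quality rules for a customer record; field names are illustrative only.
RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "country": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
}


def validate(record):
    """Return the names of fields that are missing or break a rule."""
    return [field for field, rule in RULES.items()
            if field not in record or not rule(record[field])]


def audit(records):
    """Map each failing record's position to the fields that failed."""
    report = {}
    for index, record in enumerate(records):
        failures = validate(record)
        if failures:
            report[index] = failures
    return report


records = [
    {"customer_id": 1, "email": "a@example.com", "country": "CA"},
    {"customer_id": -5, "email": "not-an-email", "country": "CA"},
    {"customer_id": 3, "email": "c@example.com"},  # country is missing
]
audit_report = audit(records)
```

Running a check like this on a schedule is one simple form of the regular audit mentioned above, since the report points to exactly which records and fields need attention.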

Additionally, use role-based access control (RBAC) to limit data access to authorized personnel. This prevents unauthorized users from handling sensitive data. Some tools provide features for tracking metadata to stay compliant with regulatory standards.
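
The core idea behind RBAC fits in a few lines. In the Python sketch below, the roles, permissions, user names, and the `is_allowed` helper are all hypothetical. Real platforms define their own role models, so this only illustrates the principle that permissions attach to roles and users receive access through the roles they hold.

```python
# Hypothetical roles and permissions; real platforms define their own.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "finance": {"read:sales", "read:payroll"},
    "admin": {"read:sales", "read:payroll", "write:sales", "write:payroll"},
}

# Hypothetical users and the roles assigned to them.
USER_ROLES = {
    "dana": {"analyst"},
    "lee": {"finance"},
}


def is_allowed(user, permission):
    """A user is allowed if any of their roles grants the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

For example, `is_allowed("dana", "read:payroll")` returns `False` because the analyst role does not include payroll access, while the same check for `"lee"` returns `True`. Unknown users have no roles and are denied by default.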

Don’t forget to implement end-to-end encryption across your entire stack. Set up regular security reviews and penetration tests to identify vulnerabilities. Monitor access logs and trace data lineage to ensure accuracy and prevent data silos.

Make the Switch to Modern

Still relying on a traditional data stack? It’s time for an upgrade. At Bronson AI, we specialize in helping businesses transition from outdated systems to modern cloud infrastructures.

Don’t wait until your current system becomes a bottleneck. Partner with us to future-proof your business.